MS Learn material

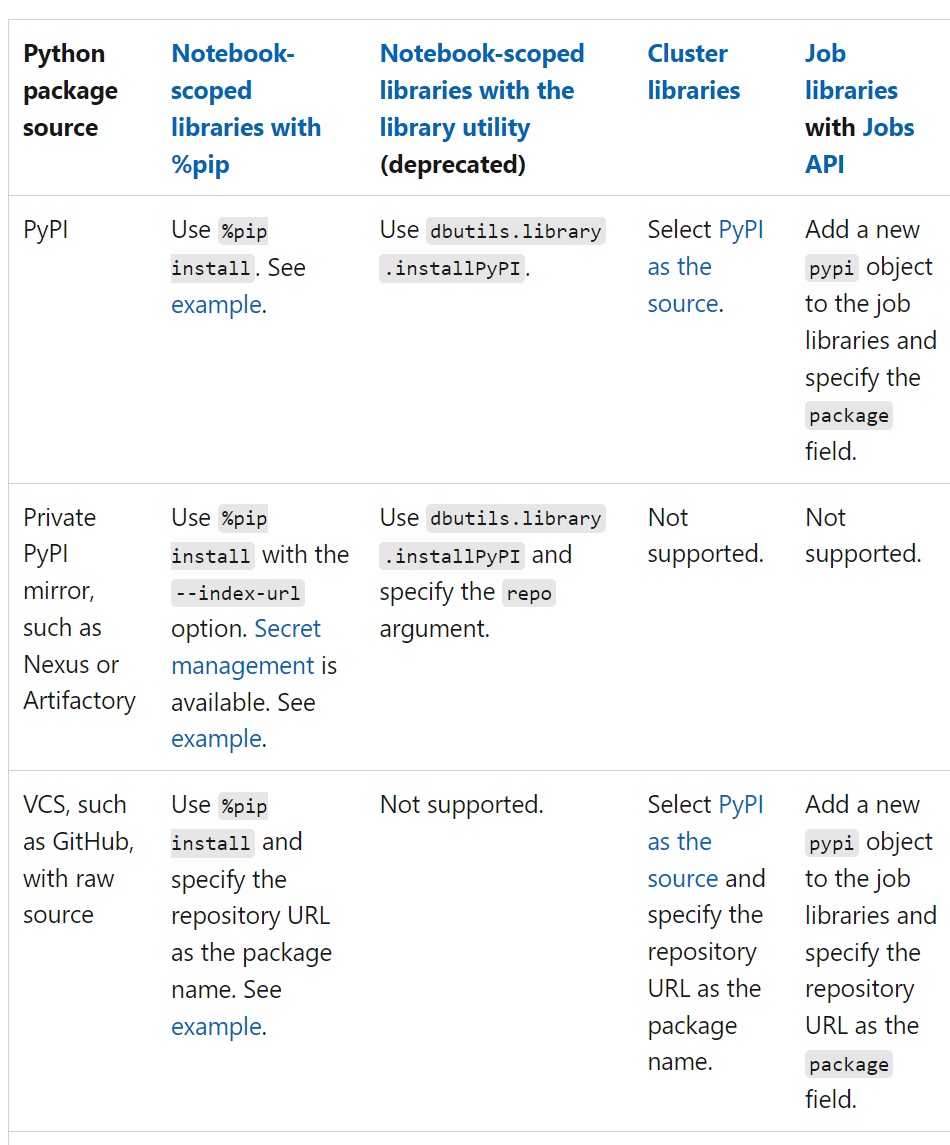

You can have different scopes when installing libraries in your Databricks notebook/workspace:

Databricks can also mount external/remote data sources.

DBFS (Databricks File System) allows you to mount storage objects so that you can seamlessly access data without supplying credentials on every access. It lets you interact with object storage using directory and file semantics instead of storage URLs. It also persists files to object storage, so you won’t lose data after you terminate a cluster.

- DBFS is a layer over a cloud-based object store

- Files in DBFS are persisted to the object store

- The lifetime of files in DBFS is NOT tied to the lifetime of our cluster
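To make the "directory and file semantics" point concrete, here is a minimal sketch of how a `dbfs:/` path relates to the `/dbfs/` path that local file APIs (such as Python's `open`) can typically use on the driver. The helper name `dbfs_uri_to_local_path` and the example file are hypothetical, and the `/dbfs/` local mount is assumed to be available on the cluster in use, which may not hold for every cluster type.

```python
def dbfs_uri_to_local_path(uri: str) -> str:
    """Translate 'dbfs:/some/path' into the '/dbfs/some/path' local form.

    Hypothetical helper for illustration; not part of dbutils.
    """
    prefix = "dbfs:/"
    if not uri.startswith(prefix):
        raise ValueError(f"not a dbfs:/ URI: {uri!r}")
    # Drop the scheme and any extra leading slashes, then re-root under /dbfs/.
    return "/dbfs/" + uri[len(prefix):].lstrip("/")


# Hypothetical file under a hypothetical mount point.
example = dbfs_uri_to_local_path("dbfs:/mnt/data/sales.csv")
print(example)  # /dbfs/mnt/data/sales.csv
```

Either form refers to the same file in the underlying object store, so no storage URL is needed once the data is reachable through DBFS.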

Databricks Utilities - dbutils

- You can access the DBFS through the Databricks Utilities class (and other file IO routines).

- An instance of DBUtils is already declared for us as dbutils.
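As a small illustration of browsing DBFS through `dbutils`, the sketch below wraps `dbutils.fs.ls`, which returns one entry per file or directory with attributes such as `name` and `path`. The function name `list_names` is hypothetical, and `dbutils` is passed in as an argument only so the snippet stays self-contained outside a notebook.

```python
def list_names(dbutils, path):
    """Return the names of the entries directly under `path` in DBFS.

    `dbutils` is the object Databricks pre-declares in every notebook;
    it is a parameter here so the function can be exercised elsewhere.
    """
    return [info.name for info in dbutils.fs.ls(path)]


# In a Databricks notebook (where `dbutils` already exists) one might run:
#   list_names(dbutils, "/mnt")
```

Inside a notebook, `dbutils.fs.help()` prints the other file system commands that are available.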

The mount command allows you to use remote storage as if it were a local folder available in the Databricks workspace.

    dbutils.fs.mount(
        source=f"wasbs://dev@{data_storage_account_name}.blob.core.windows.net",
        mount_point=data_mount_point,
        extra_configs={
            f"fs.azure.account.key.{data_storage_account_name}.blob.core.windows.net": data_storage_account_key
        },
    )
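Calling `dbutils.fs.mount` on a mount point that is already in use raises an error, so notebooks that may be re-run often guard the call. The following is a minimal sketch of one way to do that, assuming `dbutils.fs.mounts()` returns entries with a `mountPoint` attribute. The helper name `mount_if_absent` and the secret scope and key names in the usage comment are hypothetical.

```python
def mount_if_absent(dbutils, source, mount_point, extra_configs):
    """Mount `source` at `mount_point` unless that mount point is already taken.

    Returns True if a new mount was created, False if one already existed.
    """
    existing = {m.mountPoint for m in dbutils.fs.mounts()}
    if mount_point in existing:
        return False
    dbutils.fs.mount(
        source=source,
        mount_point=mount_point,
        extra_configs=extra_configs,
    )
    return True


# In a notebook, reusing the variable names from the command above.
# The scope and key names are placeholders, not real values:
#   key = dbutils.secrets.get(scope="my-scope", key="storage-account-key")
#   mount_if_absent(
#       dbutils,
#       f"wasbs://dev@{data_storage_account_name}.blob.core.windows.net",
#       data_mount_point,
#       {f"fs.azure.account.key.{data_storage_account_name}.blob.core.windows.net": key},
#   )
```

Reading the account key from a secret scope with `dbutils.secrets.get` keeps it out of the notebook source, which is generally preferable to a plain variable.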
