Installing Kafka in DO

Avro schema file for the data you will produce to Kafka:

```shell
cat << EOF > ~/temperature_reading.avsc
{
  "namespace": "io.confluent.examples",
  "type": "record",
  "name": "temperature_reading",
  "fields": [
    {"name": "city", "type": "string"},
    {"name": "temp", "type": "int", "doc": "temperature in Fahrenheit"}
  ]
}
EOF
```
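The Avro console producer reads each value as the JSON form of a `temperature_reading` record: a string `city` and an integer `temp`. As a minimal sketch of what a conforming value looks like, the hypothetical helper `temperature_json` below prints one such record and rejects temperatures that are not whole numbers. It assumes city names contain no double quotes or backslashes, because it does no JSON escaping.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of the Confluent CLI): print one
# temperature_reading value as JSON. No JSON escaping is done, so the
# city name must not contain double quotes or backslashes.
temperature_json() {
  local city="$1" temp="$2"
  # "temp" is an Avro int: accept only whole numbers written without
  # leading zeros (the 32-bit range is not checked here).
  local int_re='^-?(0|[1-9][0-9]*)$'
  if [[ ! $temp =~ $int_re ]]; then
    echo "temp must be a whole number, got '$temp'" >&2
    return 1
  fi
  printf '{"city": "%s", "temp": %s}\n' "$city" "$temp"
}

temperature_json "Paris" 72   # prints {"city": "Paris", "temp": 72}
```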

Consumer

```shell
$ confluent local services \
    kafka consume temperatures \
    --property print.key=true \
    --property key.deserializer=org.apache.kafka.common.serialization.StringDeserializer \
    --value-format avro
```

Note: `confluent local services kafka` knows to look for Kafka at localhost:9092. The `print.key=true` property means we will see each event's key in addition to its value, and `key.deserializer` tells the consumer to decode that key as a plain string with `StringDeserializer`. `--value-format avro` means we expect the value to be Avro-serialized.
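With `print.key=true`, each event is printed on one line as the key, a separator and the value. Assuming the consumer's default separator (a tab) is left unchanged, a script reading this output can split each line back into key and value. The helper name `split_consumer_line` and the sample line are illustrative, not output captured from a real run.

```shell
#!/usr/bin/env bash
# Hypothetical helper: split lines printed by the consumer with print.key=true.
# Assumes the default key separator (a tab) is in effect.
split_consumer_line() {
  local key value
  # With two variables, read puts the first tab-separated field in "key"
  # and the rest of the line (including any further tabs) in "value".
  while IFS=$'\t' read -r key value; do
    printf 'key=%s value=%s\n' "$key" "$value"
  done
}

printf 'Paris\t{"city": "Paris", "temp": 72}\n' | split_consumer_line
```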

Producer

```shell
$ confluent local services \
    kafka produce temperatures \
    --property parse.key=true --property key.separator=, \
    --property key.serializer=org.apache.kafka.common.serialization.StringSerializer \
    --value-format avro \
    --property value.schema.file=$HOME/temperature_reading.avsc
```

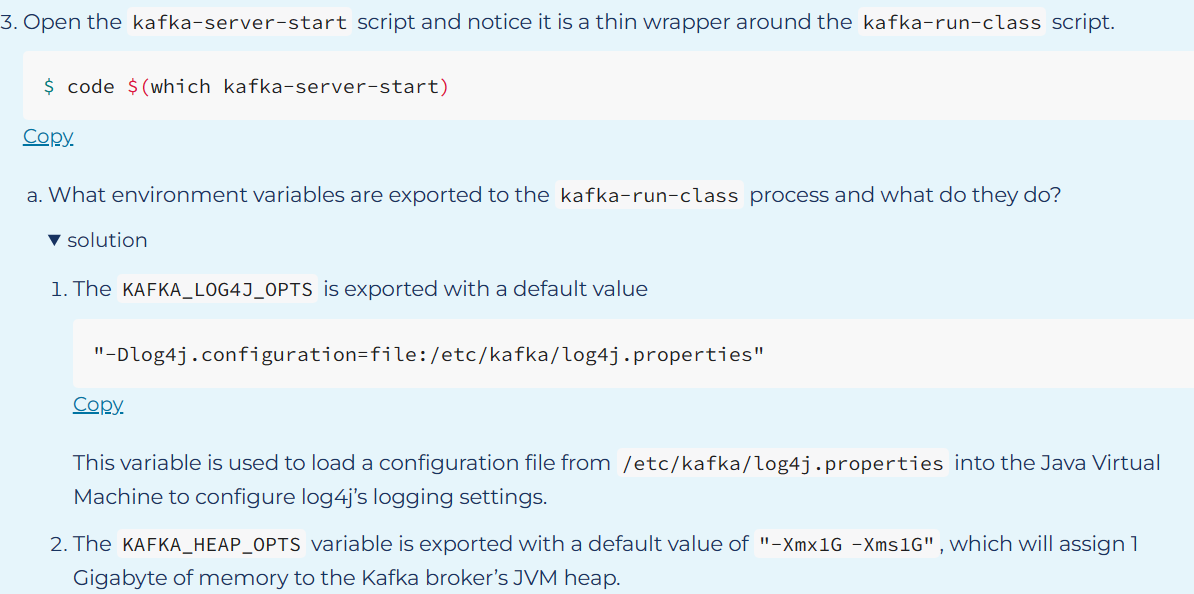

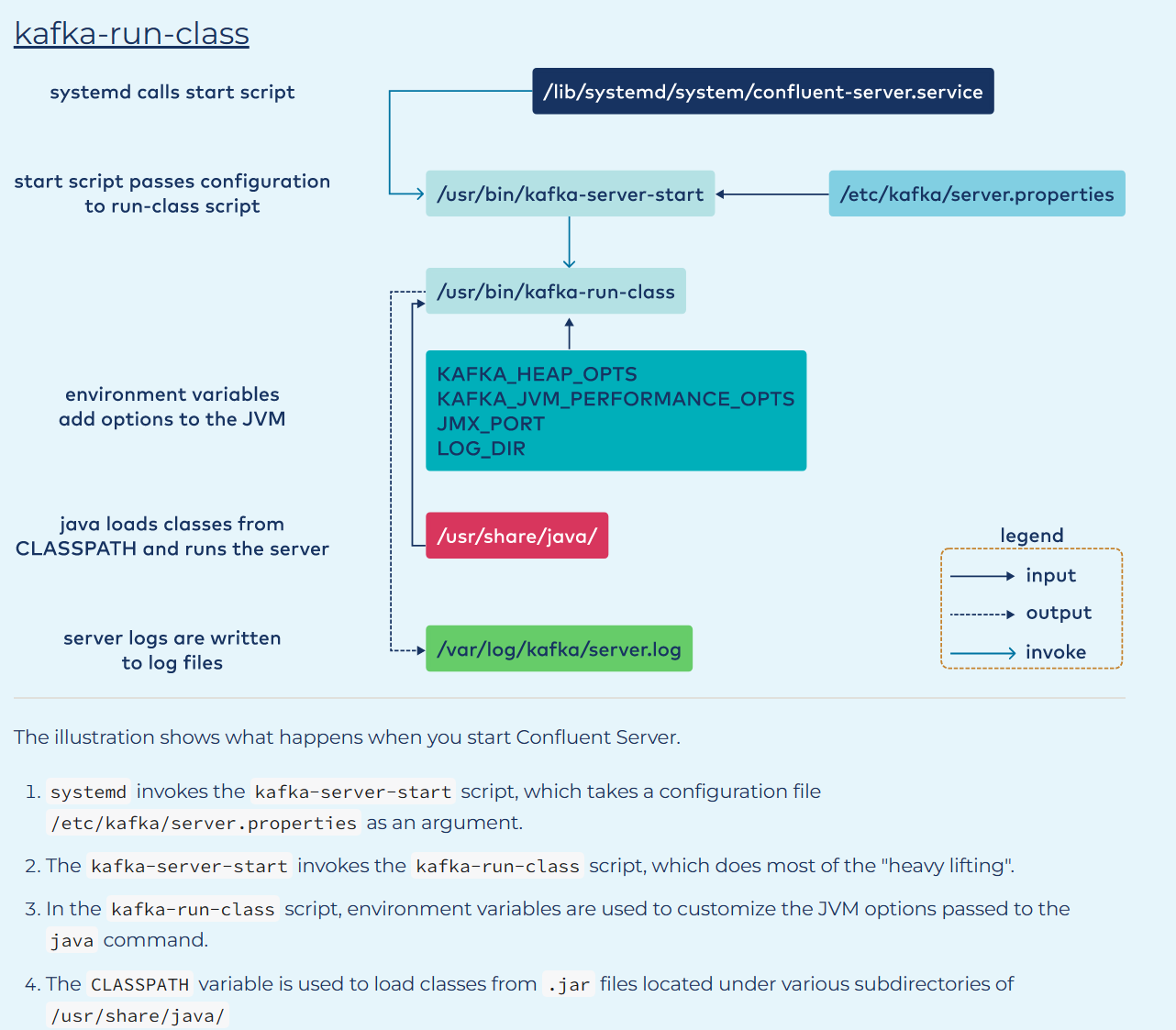
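Because the producer sets `parse.key=true` with `key.separator=,`, each input line is expected to be `<key>,<value>`, where the value is the JSON form of a `temperature_reading`. Kafka's console producer splits a line at the first occurrence of the separator, so a comma-free key such as a city name is safe even though the JSON value itself contains commas; that the Avro producer splits the same way is an assumption here. The hypothetical helper `keyed_reading` prints lines in that shape:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print one producer input line, keyed by city.
# Format: <key>,<JSON value>. The key must not contain a comma, because
# the producer is assumed to split each line at the first comma.
keyed_reading() {
  local city="$1" temp="$2"
  printf '%s,{"city": "%s", "temp": %s}\n' "$city" "$city" "$temp"
}

keyed_reading "Paris" 72
keyed_reading "Berlin" 55
```

Typing these two lines at the producer prompt would send two events keyed `Paris` and `Berlin`. Since the console producer reads standard input, piping the helper's output into the producer command should behave the same way.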

Explore run-class scripts and env variables

Kafka and Confluent components are started through run-class scripts (such as `kafka-run-class`), which read a set of environment variables and turn them, together with the classpath, into the options passed to the `java` command.
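To make this concrete, here is a heavily simplified sketch of what a start script plus run-class does: fill in a default for an unset environment variable and splice the variables into one `java` command line. `KAFKA_HEAP_OPTS`, `KAFKA_JVM_PERFORMANCE_OPTS` and `KAFKA_OPTS` are variables the real scripts read, and the `-Xmx1G -Xms1G` default mirrors the one in `kafka-server-start`; the function name `build_java_cmd` and the classpath are hypothetical, and the sketch only prints the command instead of running it.

```shell
#!/usr/bin/env bash
# Simplified sketch of how kafka-server-start and kafka-run-class build the
# broker's java command. It only prints the command, omits several variables
# the real scripts handle (GC logging, JMX, log4j), and uses a placeholder
# classpath.
build_java_cmd() {
  local main_class="$1"
  shift
  # Default heap, used only when KAFKA_HEAP_OPTS is unset or empty.
  local heap="${KAFKA_HEAP_OPTS:--Xmx1G -Xms1G}"
  local -a cmd=(java)
  # Option strings are word-split on purpose, one JVM flag per word.
  cmd+=($heap ${KAFKA_JVM_PERFORMANCE_OPTS:-})
  cmd+=(-cp "/opt/kafka/libs/*")   # hypothetical classpath placeholder
  cmd+=(${KAFKA_OPTS:-} "$main_class" "$@")
  echo "${cmd[*]}"
}

# With the KAFKA_* variables unset, this prints:
# java -Xmx1G -Xms1G -cp /opt/kafka/libs/* kafka.Kafka /etc/kafka/server.properties
build_java_cmd kafka.Kafka /etc/kafka/server.properties
```

Setting, say, `KAFKA_HEAP_OPTS="-Xms4g -Xmx4g"` before the call replaces the default heap flags, which is the same lever the real scripts expose.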

For example, the broker's systemd unit shows which start script it runs:

```shell
$ systemctl cat confluent-server
```

Output:

```ini
# /lib/systemd/system/confluent-server.service
[Unit]
Description=Apache Kafka - broker
Documentation=http://docs.confluent.io/
After=network.target confluent-zookeeper.target

[Service]
Type=simple
User=cp-kafka
Group=confluent
ExecStart=/usr/bin/kafka-server-start /etc/kafka/server.properties
LimitNOFILE=1000000
TimeoutStopSec=180
Restart=no

[Install]
WantedBy=multi-user.target
```
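Because this unit starts the broker through `kafka-server-start`, environment variables such as `KAFKA_HEAP_OPTS` can be supplied with a systemd drop-in instead of editing the packaged unit file. A minimal sketch, where the helper name `write_heap_override` and the 2 GB heap value are illustrative assumptions; `systemctl edit confluent-server` achieves the same result interactively.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a systemd drop-in that sets KAFKA_HEAP_OPTS for
# the broker. The directory is a parameter so the function can be tried in a
# scratch directory first.
write_heap_override() {
  local dropin_dir="$1" heap_opts="$2"
  mkdir -p "$dropin_dir"
  # A drop-in only needs the section and keys being added or changed.
  printf '[Service]\nEnvironment="KAFKA_HEAP_OPTS=%s"\n' "$heap_opts" \
    > "$dropin_dir/override.conf"
}

# On the broker host (as root), followed by a reload and restart:
#   write_heap_override /etc/systemd/system/confluent-server.service.d "-Xms2g -Xmx2g"
#   systemctl daemon-reload
#   systemctl restart confluent-server
#   systemctl show confluent-server --property=Environment
```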