Rancher struggles

For whatever reason K3d has a low level running traefik pod (resulting is some odd modprobe errors that I don’t even want to try to fix.) So, today I installed the K3s (instead of the k3d which runs in docker). It seems to have no problems with traefik, so going to try to install Rancher on it, but now Rancher website is down so waiting to get to read the logs

(NOTE: Got a call from Suse today.. few days I got TWO calls from databricks.. simply because I’m browsing their tutorials. Please, stop the damn direct marketing calls, its insane!!!)

Sidetracks

I thought it would be nice to have a personal persistent storage, so was thinking of buying NAS for that. Seems its going to be bit more complicated, and I might have to setup a nas CSI (container storage interface), storage classes etc., so thats probably going to be one deep rabbit hole again.

Sidetrack 3 : Venvs

As I was planning to start yet another Python project I realized that I’m having way too many venvs scattered everywhere (I usually create a ‘dedicated’ venv in the project dir.) So now Im looking into virtuanenvwrapper to store the venvs in sensible place, and also use same venv in similar projects so I dont have to install pytorch etc everywhere costing my precious SSD space.

Ranch is ready!

Finally, the local rancher is running:

Back to DBT studies

Models

- Models are .sql files that live in the models folder.

- Models are simply written as select statements - there is no DDL/DML that needs to be written around this. This allows the developer to focus on the logic.

- In the Cloud IDE, the Preview button will run this select statement against your data warehouse. The results shown here are equivalent to what this model will return once it is materialized.

- After constructing a model, dbt run in the command line will actually materialize the models into the data warehouse. The default materialization is a view.

-

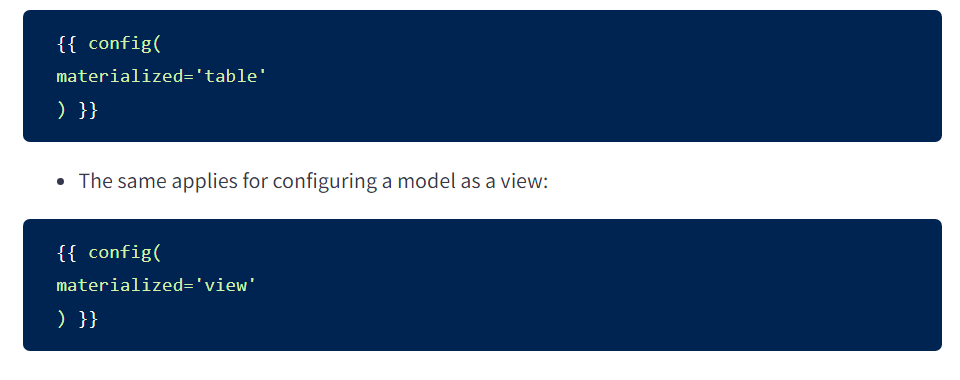

The materialization can be configured as a table with the following configuration block at the top of the model file:

-

When dbt run is executing, dbt is wrapping the select statement in the correct DDL/DML to build that model as a table/view. If that model already exists in the data warehouse, dbt will automatically drop that table or view before building the new database object. *Note: If you are on BigQuery, you may need to run dbt run –full-refresh for this to take effect.

- Models can be written to reference the underlying tables and views that were building the data warehouse (e.g. analytics.dbt_jsmith.stg_customers). This hard codes the table names and makes it difficult to share code between developers.

- The ref function allows us to build dependencies between models in a flexible way that can be shared in a common code base. The ref function compiles to the name of the database object as it has been created on the most recent execution of dbt run in the particular development environment. This is determined by the environment configuration that was set up when the project was created.

- Example: compiles to analytics.dbt_jsmith.stg_customers.

-

Naming Conventions In working on this project, we established some conventions for naming our models.

Sources (src) refer to the raw table data that have been built in the warehouse through a loading process. (We will cover configuring Sources in the Sources module) Staging (stg) refers to models that are built directly on top of sources. These have a one-to-one relationship with sources tables. These are used for very light transformations that shape the data into what you want it to be. These models are used to clean and standardize the data before transforming data downstream. Note: These are typically materialized as views. Intermediate (int) refers to any models that exist between final fact and dimension tables. These should be built on staging models rather than directly on sources to leverage the data cleaning that was done in staging. Fact (fct) refers to any data that represents something that occurred or is occurring. Examples include sessions, transactions, orders, stories, votes. These are typically skinny, long tables. Dimension (dim) refers to data that represents a person, place or thing. Examples include customers, products, candidates, buildings, employees. Note: The Fact and Dimension convention is based on previous normalized modeling.

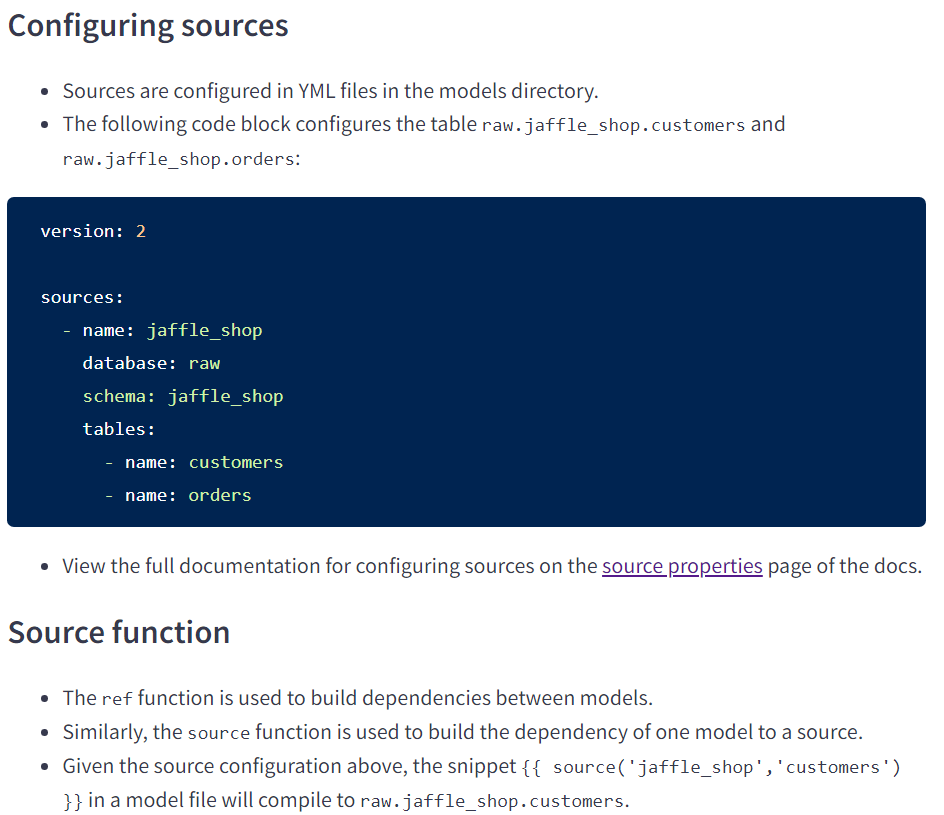

More on sources

Redshift + S3 for time-series data

https://github.com/garystafford/kinesis-redshift-streaming-demo